Neurotypical babies' social behavior takes off as their attention to social input plummets: Why we should be wary of autism theories based on attention to people vs. objects

During the second half of the first year, usually around nine months, most babies learn to share attention with others by alternating their gaze between another person and an object. Their repertoire of social gestures expands enormously, too. With such a rapid spurt of social development, typically developing babies must be paying more attention to people and less attention to other things in their environment, right?

As it turns out, when you measure what babies are actually looking at, the opposite is true.

Babies' visual environments

There are many theories about what babies learn from their environment, how much they can learn from it, and how much must be innate. For example, is the language babies hear complex and varied enough for them to work out its grammatical rules? Or is an innate, grammar-specific part of the brain needed to explain that learning (a "poverty of the stimulus" argument)? Do babies need lots of exposure to faces to learn to recognize them, or are they born with a specific part of the brain that processes faces in a unique way that supports recognition? These questions are easy to speculate about but hard to test: you need a way to measure what babies actually see and hear in their homes and communities, and until recently that was very hard to do.

One especially important aspect of babies' environments is the other people they see, and in particular their faces. Faces matter because they are vital for recognizing others, carry emotional and social information, and provide cues that help with language perception (e.g., mouth movements). So Swapnaa Jayaraman, Caitlin Fausey, and Linda Smith [1] set out to measure the faces babies saw during their first year, at home and in the community. How often did babies see faces? Whose faces were they? How close were they, and which parts were visible? Did these variables change as babies grew?

They measured the faces babies saw using small head cameras mounted on a hat that the babies wore.¹ The cameras captured a broad view of whatever was directly in front of the child's head. Parents were shown how to use the camera and asked to try to capture 4 to 6 hours of video during a variety of daily activities while the baby was awake and alert. They had up to two weeks to make the recordings.

Above: a) A baby at home, wearing the head camera mounted on a hat. b) Example frames coded during data analysis.

From Jayaraman, Fausey & Smith (2015).

Families of 22 babies, ranging from 1 to 11 months old, participated and provided over 100 hours of video. Most recordings were made in the child's home (84%), but some took place outdoors or in group settings (11%), in the car (4%), or in other locations (2%), such as during errands. From these recordings, one still frame per five seconds of video was coded (72,150 frames in total). Coders looked for the following:

Is there a face, or part of a face, in the frame?

Whose face is in the image?

How far is the face from the head camera? Distance was estimated by comparing the face or face parts in the frame with templates made by filming a female face at 1-foot increments (1 foot, 2 feet, etc.) from the camera.

Are both eyes visible?
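As a quick check on the sampling figures reported above, here is a minimal Python sketch that converts the number of coded frames back into hours of recorded video. It assumes exactly one frame was coded per 5 seconds of video, with no gaps or skipped segments; the function name `hours_of_video` is ours, for illustration only, and is not part of the study's analysis.

```python
# Hypothetical sanity check (not the researchers' code): how many hours of
# video does the reported number of coded frames imply?
# Assumption: exactly one still frame was coded per 5 s of video, no gaps.

SECONDS_PER_FRAME = 5      # one coded still frame per five seconds of video
CODED_FRAMES = 72_150      # total frames coded, as reported in the study


def hours_of_video(frames: int, seconds_per_frame: float) -> float:
    """Return the hours of video implied by sampling one frame every
    `seconds_per_frame` seconds."""
    return frames * seconds_per_frame / 3600


hours = hours_of_video(CODED_FRAMES, SECONDS_PER_FRAME)
print(f"{hours:.1f} hours")
```

The result, roughly 100.2 hours, is consistent with the "over 100 hours" of recordings the families provided.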

Smith's team wanted to know how many faces babies saw, to determine how much experience they actually had with faces. They also wanted to know how close and clear the faces were, because quality might matter as well as quantity. Further, they wanted to know how many different people's faces babies saw. If babies saw only a few faces, such as those of family members, they might become very good at recognizing their family's faces and similar-looking ones, but less skilled at recognizing very different-looking faces. Lastly, the researchers wondered how these variables changed as babies learned to crawl and walk and as their visual acuity improved.

Smith's team found that babies saw an average of 8 unique people in the videos, but the number ranged from 2 to 20. The number of different faces babies saw wasn't related to age. However, the most frequently viewed faces (generally parents) appeared proportionally less often for older babies. (This held for the single most frequent face, the two most frequent, and the three most frequent.)

Babies are born with very poor eyesight, which gradually becomes more acute. So, not surprisingly, faces were less than two feet from the youngest babies, and the distance tended to increase with age.

Regardless of age, babies typically saw faces from the front, with both eyes visible. Thus, they usually got fairly high-quality views of faces.

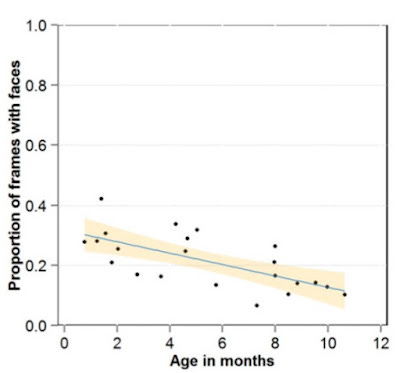

There was one surprising finding: The proportion of frames containing faces dropped dramatically with age. The youngest babies saw faces nearly 15 minutes out of every recorded hour, while the oldest saw them only about 5 minutes per hour. So as babies are becoming more competent at social interaction behaviors, they are actually getting less exposure to social stimuli.

Above: The proportion of frames containing faces drops by about half between 1 and 11 months.

From Jayaraman, Fausey & Smith (2015).

A brave new world of objects

So, if older babies are seeing fewer faces, what are they looking at instead? Another head camera study by the same research team [2] suggests an answer.

Smith and colleagues compared head camera views from six 1- to 3-month-old babies, who could neither sit independently nor hold objects, with those from five 7- to 9-month-olds, who could crawl and manipulate objects. Forty-four hours of video were sampled every 5 seconds. Younger babies saw mostly faces, ceilings, and walls, with very few objects. By contrast, older babies typically saw hands, objects within close reach, and floors. For younger babies to see objects, someone else has to place the objects in view, or the objects must happen to be near where the babies are lying or being held. But once babies learn to crawl (typically around six months), they spend a great deal of time on the floor and can maneuver themselves within reach of many objects.

Objects, not faces, dominate the visual worlds of babies at the very age they are developing joint attention and social gestures.

Researchers in my department are highly aware of this dynamic, and argue that a central problem for babies in the second half of the first year is to balance the competing demands of attending to others with attending to objects. Because attention control is still limited at this age, this is a big challenge.

This increased focus on objects and decreased focus on faces should not be assumed to reflect a lack of social motivation by these typically developing babies (as would be assumed if they were autistic). Rather, developing motor skills are changing what babies see, and therefore what they can attend to.

How do typically developing babies solve the problem of maintaining social engagement in a world of fascinating objects? They use cues produced by others interacting with objects to share attention. Parents' hand movements are coordinated with their eye gaze, and babies can use these movements to determine what parents are attending to. In so doing, babies consistently look at the same object at the same time as parents, an experience that may allow them to appreciate shared attention before they start using eye gaze or facial expressions as a cue.

Implications for social attention theories of autism

Some researchers speculate that autistic children are biased to look at "nonsocial" stimuli, such as objects or interesting geometrical patterns, more than faces early in life. This in turn limits their exposure to faces and the information they convey, potentially delaying their social development.

Ami Klin's team, well-known proponents of this explanation of autism, even argued that whether male "high-risk siblings" were later diagnosed autistic themselves could be predicted from how much they looked at the eyes while watching short video clips of a female caregiver. Although this study was widely reported in the media, Jon Brock points out a number of fatal flaws in it, including that the trajectories of the later-diagnosed-autistic and low-risk control groups did not actually differ until the final test session at 24 months. When the analyses are carefully restricted to 2- to 6-month-olds, boys who developed autism showed declining eye gaze while low-risk controls showed increasing eye gaze, but this can be explained simply by the fact that the high-risk babies had higher eye gaze than the controls to begin with.

So, there is plenty of reason to be skeptical about this account of autism even on its own terms.

But it's the research with typically developing babies that truly suggests we should take social attention theories of autism with a large dose of salt. Typically developing babies are reducing their attention to faces and increasing their attention to objects, so if the social attention theorists were right, their social development should decline. In fact, it soars. Moreover, rather than distracting babies from social engagement, objects and the hands that manipulate them offer new ways to share attention with others. If attention to objects over faces doesn't necessarily impair social development in neurotypical children, there is no reason to assume that it does in autism, either.

Too often, researchers assume a specific trait, such as social disability in autism, and then reach backwards looking for something to explain it. Or, they might see two traits--social disability and avoidance of eye contact--and link them together, because intuitively, eye contact seems related to social functioning. This is not good science, and the flaws of this approach become especially obvious when it is done without reference to how the trait typically develops, as happened here.

To determine the real explanation, we need to use head camera measures like these, along with eye tracking, to better understand what autistic babies are seeing and doing during this crucial developmental stage. How does this compare with typically developing babies? Are they seeing the same amount and type of faces? Do they see fewer faces and more objects during the second half of the first year, too--or might delays in motor development affect this pattern? Do autistic babies also use others' manipulations of objects to share attention and maintain social engagement? If there are differences here, whatever they are, they are likely to be more subtle and interesting than any social attention theories that have so far been proposed.

References

[1] Swapnaa Jayaraman, Caitlin M. Fausey, and Linda B. Smith (2015). The faces in infant-perspective scenes change over the first year of life. PLoS ONE, 10(5), e0123780. Open access.

[2] Swapnaa Jayaraman, Caitlin M. Fausey, and Linda B. Smith (2013). Visual statistics of infants' ordered experiences. Meeting abstract presented at Vision Sciences Society 2013.

¹ A limitation of head cameras is that the head and eyes are not always perfectly aligned, although they usually are, especially in babies. Another limitation is that head cameras miss peripheral information; however, babies' central vision is much more acute and probably used more anyway.