How can you measure a non-speaking person's understanding of language?

The autism community has become increasingly concerned with understanding people who have no spoken language and, often, severe developmental delays. Such people have previously been excluded from many research studies in favor of more convenient participants. However, psychology offers a variety of tools for learning about the mental lives of people who cannot speak, many of which involve measuring eye movements. Some of these, such as habituation and preferential looking, come from research with babies. This makes sense, because working with babies means making inferences about the thoughts of people with little or no speech or gesture. Surprisingly, another paradigm comes from research on how adults interpret language: the "visual world" paradigm, along with a simpler version for young children called "looking while listening."

Visual World Paradigm

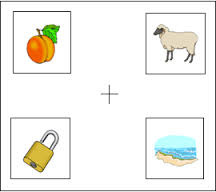

Example of the visual world paradigm, using the words "peach," "beach," "sheep," and "lock."

Back in 1974, a researcher named Cooper measured people's eye movements while they listened to short narratives and looked at pictures of common objects. Some of the objects were mentioned in the narrative, while others were not. Participants were told their pupil size was being recorded, but that they could look anywhere they wanted. The listeners tended to look at objects as they were mentioned: either while the word was still being spoken, or within about 200 ms afterward [4]. In the 1990s, when eye movements became easier to measure, Tanenhaus's research team found something similar. The method, dubbed the "visual world paradigm," quickly took off [4].

While people do not consciously choose to look at the objects they are thinking about, and may not even realize they're looking at them, their eye movements still reflect their thoughts. That makes the visual world paradigm attractive for understanding language comprehension.

Researchers used this method because they were interested in how people's language abilities interact with their non-language abilities, such as the ability to recognize objects [4]. They were also interested in when and how we distinguish a word from other, similar-sounding words. Although short words feel as if they are recognized all at once, they actually take several hundred milliseconds to say. During this time, information about the word gradually accumulates, and the listener's brain uses it to predict which word the speaker is saying. The visual world paradigm can measure how long it takes to distinguish a word (e.g., "beaker") from another word that sounds the same at first (e.g., "beetle"), as opposed to a rhyming word (e.g., "speaker") or a completely unrelated one (e.g., "dolphin") [1]. The first study I ever worked on [2] used the visual world approach with these exact types of words while measuring brain activity with EEG.
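To make the idea of gradually accumulating information concrete, here is a minimal Python sketch of a simple prefix-matching ("cohort-style") account of word recognition. The phoneme transcriptions and the `candidates` function are hypothetical illustrations, not the models tested in [1]. Because it only matches word beginnings, it drops a rhyme like "speaker" immediately and so cannot show any partial activation of rhyming words, which is part of what visual world studies measure.

```python
# Hypothetical, simplified ARPAbet-style transcriptions; not taken from any study.
LEXICON = {
    "beaker": ["B", "IY", "K", "ER"],
    "beetle": ["B", "IY", "T", "AH", "L"],
    "speaker": ["S", "P", "IY", "K", "ER"],
    "dolphin": ["D", "AA", "L", "F", "IH", "N"],
}


def candidates(heard, lexicon=LEXICON):
    """Return the words whose transcription begins with the phonemes heard so far.

    This is a prefix-only (cohort-style) filter: a rhyme such as "speaker"
    is ruled out as soon as the first phoneme mismatches.
    """
    n = len(heard)
    return sorted(word for word, phones in lexicon.items() if phones[:n] == list(heard))


if __name__ == "__main__":
    spoken = LEXICON["beaker"]
    # Feed the word in one phoneme at a time and show the surviving candidates.
    for i in range(1, len(spoken) + 1):
        print(" ".join(spoken[:i]), "->", candidates(spoken[:i]))
```

Running it prints the candidate set after each sound: "beaker" and "beetle" both remain through "B IY", and the third sound, "K", rules out "beetle."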

An important aspect of this method is timing. It matters not only which picture people look at, but also when they look at it. The eye movement data are aligned very precisely with the timing of the audio and the pictures; in other words, they are "time-locked." One can then say, for example, that when the speaker has said "bea," the listener glances at both the beetle and the beaker, but by the time the word finishes, the listener is looking only at the beaker.
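Time-locking can be sketched in Python roughly as follows. This assumes the eye tracker and the audio playback log their timestamps on one shared clock, which real setups must arrange explicitly. The `GazeSample` record, its field names, and both functions are hypothetical; actual eye-tracking software exports its own formats.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class GazeSample:
    t_ms: float  # timestamp on the eye tracker's clock, in milliseconds
    aoi: str     # picture being looked at (e.g. "beaker"), or "none"


def time_lock(samples, word_onset_ms):
    """Re-express each sample's time relative to word onset (0 = onset).

    Assumes the word onset was logged on the same clock as the eye tracker.
    """
    return [GazeSample(s.t_ms - word_onset_ms, s.aoi) for s in samples]


def looks_in_window(locked, start_ms, end_ms):
    """Count time-locked samples on each picture in the window [start_ms, end_ms)."""
    return Counter(s.aoi for s in locked if start_ms <= s.t_ms < end_ms)


if __name__ == "__main__":
    # Hypothetical trial: the word "beaker" starts at 1000 ms on the tracker's clock.
    raw = [
        GazeSample(1000, "none"),
        GazeSample(1250, "beetle"),
        GazeSample(1300, "beaker"),
        GazeSample(1600, "beaker"),
        GazeSample(1700, "beaker"),
    ]
    locked = time_lock(raw, word_onset_ms=1000)
    print(looks_in_window(locked, 200, 400))  # early window: beetle and beaker
    print(looks_in_window(locked, 500, 800))  # later window: beaker only
```

Once every trial is expressed relative to word onset, trials with different onset times can be compared and averaged moment by moment.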

Looking While Listening

A simpler version of the visual world paradigm, the "looking while listening" procedure, is used to measure very young children's language comprehension [3]. Children look at a pair of pictures while listening to speech that names one of the pictures. Just as in the visual world paradigm, their gaze patterns are measured and time-locked to the speech signal.

One difference is that only two pictures appear onscreen instead of four. Fewer distracters suit very young children and those with limited ability to control their attention. The pictures are also carefully matched on how bright and interesting they are.

Researchers code where the child is looking during each trial, one video frame at a time. Trials are then categorized according to where the child is looking when the word begins and when it ends [3].

For example, if a child starts out looking at the correct object (the "target") and keeps looking at it for the entire time the word is presented, the trial is a "target-initial" trial, and this pattern reflects comprehension. If the child starts out looking at the distracter picture but shifts to the correct picture during the word, the trial is a "distracter-initial" trial, and this shift also indicates comprehension.

Certain patterns indicate the child probably does not comprehend the spoken language presented, including:

The child starts out looking at the distracter picture and never shifts to looking at the correct picture.

The child starts out looking at the target picture and switches to the distracter.

Other patterns suggest that the child was not performing the task at all, and his or her comprehension can't be judged:

The child was looking somewhere between the pictures the whole time and not directly at either of them.

The child was looking away (not at the display at all) the whole time.
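The trial categories above can be sketched as a small Python function over frame-by-frame codes. The single-letter codes, the label strings, and `classify_trial` are all hypothetical, and two simplifications are assumptions rather than rules from [3]: a target-initial trial counts as comprehension if the child never looks at the distracter, and trials that start between the pictures or away but later land on a picture are left unclassified.

```python
# Hypothetical frame codes: "T" target, "D" distracter, "B" between pictures, "A" away.
TARGET, DISTRACTER = "T", "D"


def classify_trial(frames):
    """Classify one trial from its frame-by-frame gaze codes.

    `frames` holds one code per video frame, from word onset to word offset.
    """
    if not frames:
        raise ValueError("trial has no coded frames")
    if TARGET not in frames and DISTRACTER not in frames:
        # Between the pictures or away the whole time: comprehension can't be judged.
        return "off_task"
    start = frames[0]
    if start == TARGET:
        # Target-initial: comprehension only if gaze never moves to the distracter.
        if DISTRACTER not in frames:
            return "target_initial_comprehension"
        return "target_initial_no_comprehension"
    if start == DISTRACTER:
        # Distracter-initial: comprehension if gaze shifts to the target during the word.
        if TARGET in frames:
            return "distracter_initial_comprehension"
        return "distracter_initial_no_comprehension"
    # Started between the pictures or looking away; not covered by the categories above.
    return "unclassified"


if __name__ == "__main__":
    for trial in ["TTTT", "DDBTT", "DDDD", "TTDD", "BBBB", "AAAA"]:
        print(trial, classify_trial(trial))
```

The example trials in the usage loop correspond, in order, to the two comprehension patterns, the two non-comprehension patterns, and the two off-task patterns described above.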

Data can be plotted continuously to show how children's comprehension gradually unfolds, and how it improves with age.

[Figure: continuous plot of children's looking over time, from Fernald and colleagues (2008) [3].]
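A continuous curve of this kind is built by pooling trials at each moment after word onset. The Python sketch below computes, for each frame, the proportion of trials fixating the target among trials on either picture. The frame codes, the function name, and the 30 frames-per-second rate used to convert frames to milliseconds are assumptions for illustration; the actual frame rate depends on the video used for coding.

```python
def target_curve(trials, fps=30.0, target="T", distracter="D"):
    """Proportion of trials fixating the target at each frame after word onset.

    Each trial is a sequence of frame codes starting at word onset. At each
    frame, only trials whose gaze is on one of the two pictures are counted.
    Returns (time_ms, proportion) pairs; proportion is None when no trial is
    on either picture at that frame.
    """
    if not trials:
        return []
    n_frames = max(len(t) for t in trials)
    curve = []
    for i in range(n_frames):
        # Codes from every trial that is still running at frame i.
        codes = [t[i] for t in trials if i < len(t)]
        on_target = codes.count(target)
        on_either = on_target + codes.count(distracter)
        proportion = on_target / on_either if on_either else None
        curve.append((i * 1000.0 / fps, proportion))
    return curve


if __name__ == "__main__":
    # Hypothetical frame-coded trials ("T" target, "D" distracter, "A" away).
    trials = ["DDTT", "TTTT", "DTTA"]
    for t_ms, p in target_curve(trials):
        print(f"{t_ms:6.1f} ms  {p}")
```

Computing one such curve per age group and plotting them together is one way to see comprehension improving with age.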

However, other measures are more intuitive and easier to compare between groups. Perhaps the most intuitive is accuracy, the proportion of time spent looking at the correct picture as opposed to the distracter (or anything else) [3]. If the child consistently looks at the labeled picture for most of the time, then he or she probably understands that the label refers to that picture.
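Accuracy can be computed from the same frame codes. This minimal Python sketch assumes the frames have already been restricted to an analysis window after word onset chosen by the researcher. The function name and the `include_other` switch are hypothetical; the switch covers the two readings of "as opposed to the distracter (or anything else)": dividing by frames on either picture, or by every coded frame.

```python
def accuracy(frames, target="T", distracter="D", include_other=False):
    """Proportion of coded frames spent on the target picture.

    By default the denominator is frames on either picture (target or
    distracter). With include_other=True it is every coded frame, so looks
    between the pictures or away also count against accuracy.
    Returns None when the denominator is zero.
    """
    on_target = sum(1 for f in frames if f == target)
    if include_other:
        denominator = len(frames)
    else:
        denominator = on_target + sum(1 for f in frames if f == distracter)
    return on_target / denominator if denominator else None


if __name__ == "__main__":
    print(accuracy("TTTD"))                      # 0.75
    print(accuracy("TTBA"))                      # 1.0: only picture looks count
    print(accuracy("TTBA", include_other=True))  # 0.5: every frame counts
```

The two settings can give quite different numbers for a child who often looks away, so whichever denominator is used should be reported alongside the results.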

Children look more consistently at the correct picture as they get older and develop stronger language skills. Looking while listening comprehension may also reflect broader individual differences in language ability. For example, typically developing children with higher looking while listening comprehension at 25 months may have higher standardized language scores (CELF-4) at 8 years [3].

Using looking while listening with autistic children

Looking while listening has been used to measure young autistic children's language comprehension in their own homes [5]. Children view videos with two side-by-side images, accompanied by audio that matches only one of the images. If children understand which image matches the audio, they will look at it rather than at the distracter. Videos were used instead of static pictures because they are better suited to measuring children's understanding of verbs, including who did what to whom. A video by Letitia Naigles and Andrea Tovar [5] shows how the stimuli are designed and presented to children. It's a great example of how high-quality, well-controlled research can be done outside the lab, where participants can be comfortable.

Of course, this method isn't perfect. One question to be resolved is how well it works for people with difficulty making eye movements. Difficulty making controlled eye movements sometimes occurs in autism, and may be especially common in those with language impairments. The eye movements made during the looking while listening paradigm are not deliberate, so I am not sure if a person with difficulty making controlled eye movements would also have difficulty with these. If so, this task won't be suitable for every nonspeaking person. But no assessment method is suitable for everyone, and this is more accessible than most standardized tests.

Measuring people's understanding of nouns, verbs, and sentences is very far from understanding a person's experience. So, the looking while listening paradigm and similar methods do not completely solve the problem of understanding nonspeaking people. However, they might help with presuming competence and offering an appropriate education. When we can actually measure whether a nonspeaking person can understand what we say to them, we no longer have any excuse for assuming they do not understand. Most likely, many nonspeaking autistic people will demonstrate comprehension beyond what their parents or teachers predicted.

References

[1] Paul D. Allopenna, James S. Magnuson, and Michael K. Tanenhaus (1998). Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language vol. 38, pp. 419-439.

[2] Amy S. Desroches, Randy Lynn Newman, and Marc F. Joanisse (2009). Investigating the time course of spoken word recognition: Electrophysiological evidence for the influences of phonological similarity. Journal of Cognitive Neuroscience vol. 21, iss. 10, pp. 1893-1906.

[3] Anne Fernald, Renate Zangl, Ana Luz Portillo, and Virginia A. Marchman (2008). Looking while listening: Using eye movements to monitor spoken language comprehension by infants and young children. In: Irina A. Sekerina, Eva M. Fernandez, and Harald Clahsen (editors), Developmental Psycholinguistics: On-line methods in children's language processing, pp. 97-135.

[4] Falk Huettig, Joost Rommers, and Antje S. Meyer (2011). Using the visual world paradigm to study language processing: A review and critical evaluation. Acta Psychologica vol. 137, pp. 151-171.

[5] Letitia R. Naigles and Andrea T. Tovar (2012). Portable intermodal preferential looking (IPL): Investigating language comprehension in typically developing toddlers and young children with autism. Journal of Visualized Experiments vol. 70, e4331 (paper and video).